At a very small scale, and all things considered, a computer “cabinet” that hosts cloud servers and services is a very small data center, and its key components are in fact quite similar to those of large ones… (to anticipate the comments: we understand that large data centers are of course much more complex, more cutting-edge, harder to “control”, more technical, etc., but again, they are not so fundamentally different from a conceptual point of view).

Documenting the black box… (or un-blackboxing it?)

You can definitely find similar concepts that are “scalable” between the very small – personal – and the extra large. The aim of this post, following two previous ones about software (part #1) –with a technical comment here– and hardware (part #2), is therefore to continue documenting and “reverse engineering” the setup of our own (small size) cloud computing infrastructure and of what we consider to be the basic key “conceptual” elements of this infrastructure: the ones that we’ll possibly want to reassess, reassemble in a different way or question later during the I&IC research.

However, note that a meaningful difference between the big and the small data center is that a small one could sit in your own house or small office, or physically find its place within an everyday situation (becoming some piece of mobile furniture? something else?) and be administered by yourself (becoming personal). Besides the fact that our infrastructure offers server-side computing capacities (which makes it different from a Network Attached Storage), this is also a reason why we’ve picked this type of infrastructure and configuration to work with, instead of a third party API (e.g. Dropbox, Google Drive, etc.) with which we wouldn’t have access to the hardware parts. This system architecture could then possibly be “indefinitely” scaled up by connecting it to similar distant personal clouds in a highly decentralized architecture –as ownCloud, for example, now seems to allow with its “server to server” sharing capabilities–.

See also the two mentioned related posts:

Setting up our own (small size) personal cloud infrastructure. Part #1, components

Setting up our own (small size) personal cloud infrastructure. Part #2, components

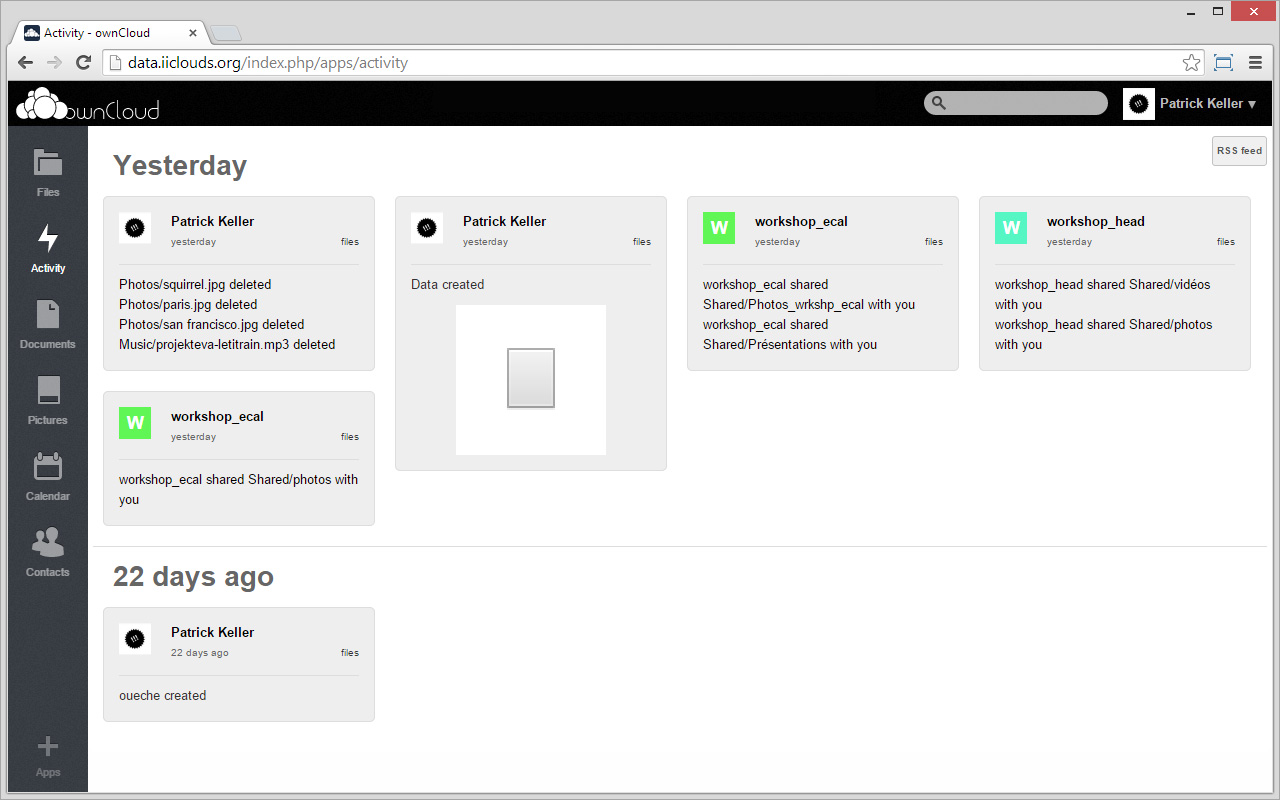

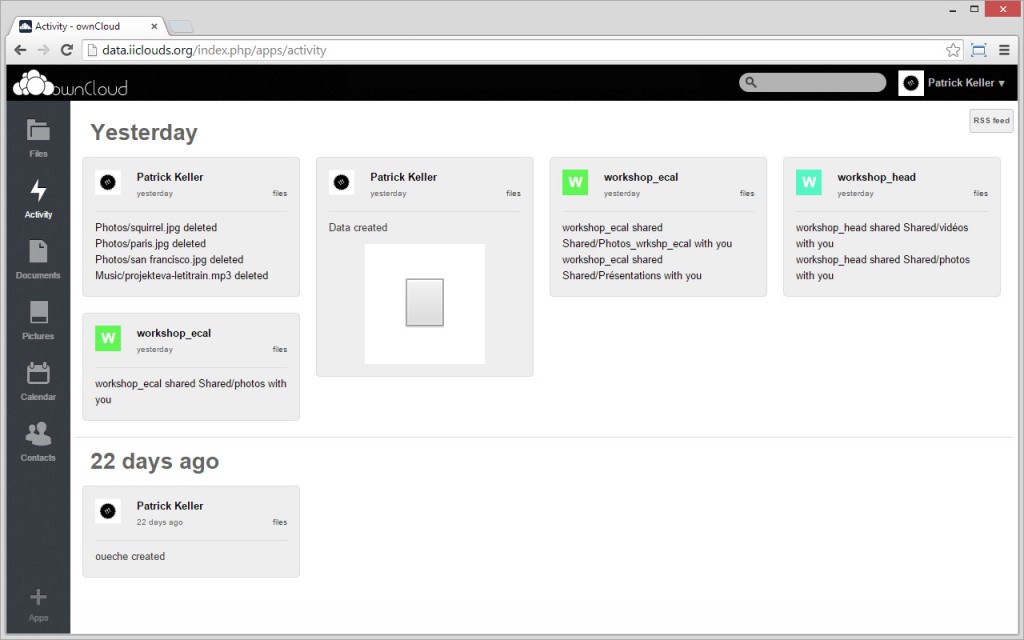

For our own knowledge and out of a desire to better understand, document and share the tools with the community, but also to be able to run this research in a highly decentralized way and keep our research data under our control, we’ve set up our own small “personal cloud” infrastructure. It uses a Linux server and the open source ownCloud software (“data, under your control”), installed on RAID computing and data storage units within a 19″ computer cabinet. This setup will help us exemplify in this post the basic physical architecture of the system and learn from it. Note that the “Cook Book” for the software setup of a personal cloud similar to ours is accessible here.

Before opening the black box though, which as you can witness has nothing to do with a clear blue sky (see here too), let’s mention one more time this resource that fully documents the creation of open sourced data centers: Open Compute Project (surprisingly initiated by Facebook to celebrate their “hacking background” –as they stated it– and continuously evolving).

So, let’s first access the “secured room” where our server is located, then remove the side doors of the box and open it…

Standardized

This is the server and setup that currently hosts this website/blog and the different cloud services that will be used during this research. Its main elements are quite standardized (according to the former EIA standards –now ECIA–, especially standard EIA/ECA 310E).

It is a standardized 19-inch computer cabinet, 600 x 800 mm (finished horizontal outside dimensions). Another typical size for a computer cabinet is 23 inches. These dimensions reflect the width of the inner equipment including the holding frame. The height of our cabinet is mid-size: 16 rack units (16U). A very typical size for a computer cabinet is 42U. A rack unit (U) is 1.75 inches (or 4.445 cm). The rack unit module in turn defines the sizes of the physical material and hardware that can be assembled onto the rails. Servers, routers, fans, plugs, etc. and computing parts therefore need to fit into this predefined module and are sized vertically according to “U”s (1U, 2U, 4U, 12U, etc.).
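As a small worked example of the rack unit arithmetic above, here is a minimal Python sketch. The function names and the equipment list are our own illustrative assumptions; only the 1.75 inch rack unit and the 16U and 42U sizes come from the text.

```python
RACK_UNIT_INCHES = 1.75  # one rack unit (1U), as stated in the post
CM_PER_INCH = 2.54       # exact inch-to-centimetre conversion


def rack_height_cm(units):
    """Return the mounting height, in cm, taken by `units` rack units."""
    return units * RACK_UNIT_INCHES * CM_PER_INCH


def fits(cabinet_units, equipment_units):
    """Check whether equipment of the given U sizes fits into the cabinet."""
    return sum(equipment_units) <= cabinet_units


if __name__ == "__main__":
    for size in (1, 16, 42):
        print(f"{size}U = {rack_height_cm(size):.3f} cm")
    # Hypothetical equipment list: a 1U server, a 2U unit and a 4U unit.
    print(fits(16, [1, 2, 4]))
```

Our 16U cabinet therefore offers 28 inches (about 71 cm) of mounting height, against 73.5 inches (about 187 cm) for a typical 42U cabinet. These figures describe the space on the rails, not the outside dimensions of the cabinet.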

-

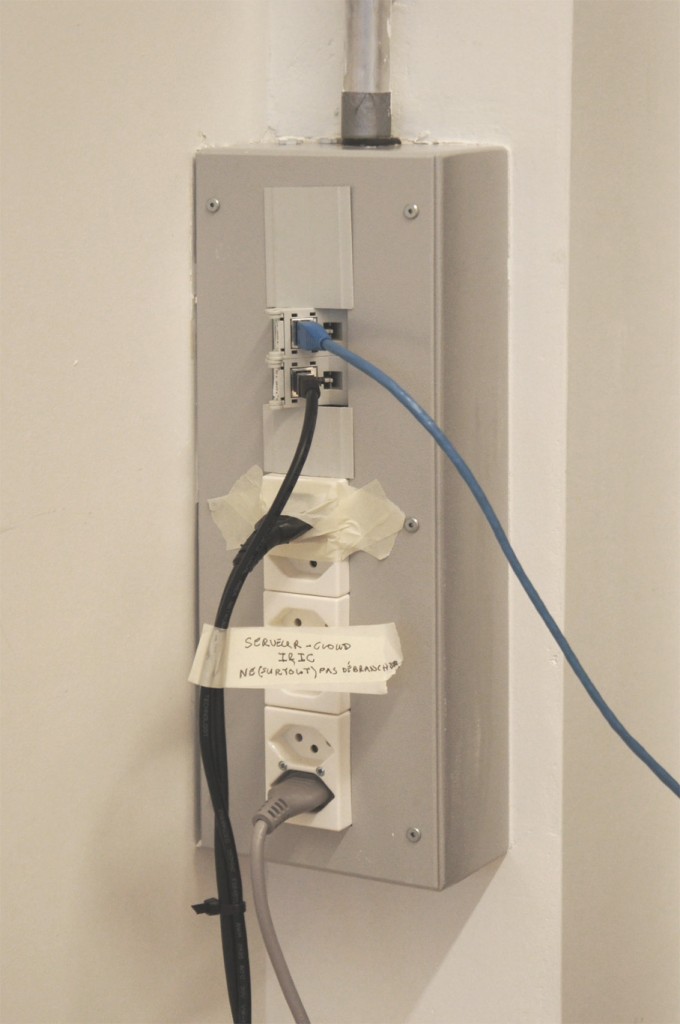

Networked (energy and communication)

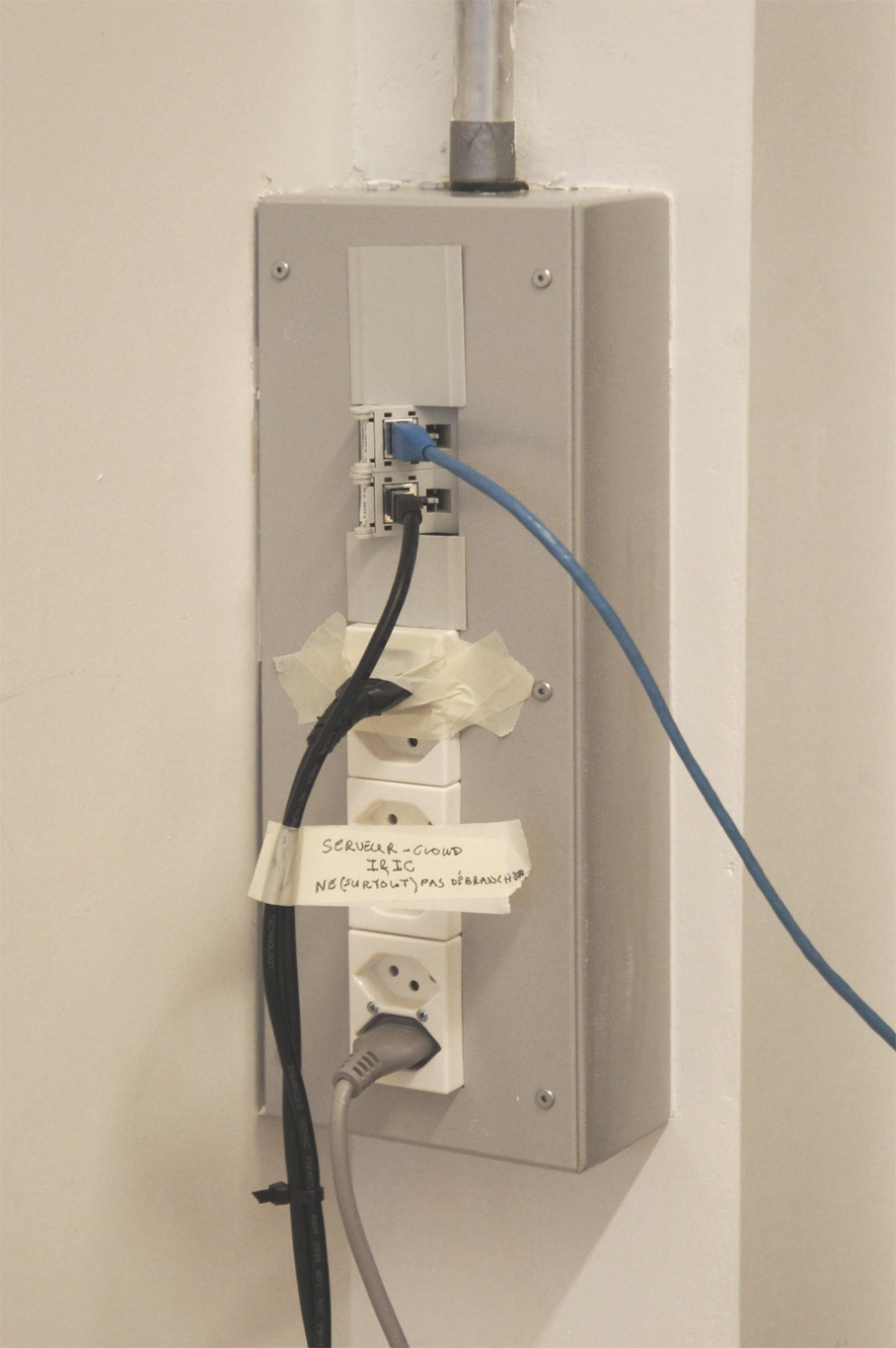

Even though our setup doesn’t follow the security standards at all… our hardware is nonetheless connected to the energy and communication networks through four plugs (redundant electric and RJ45 plugs).

-

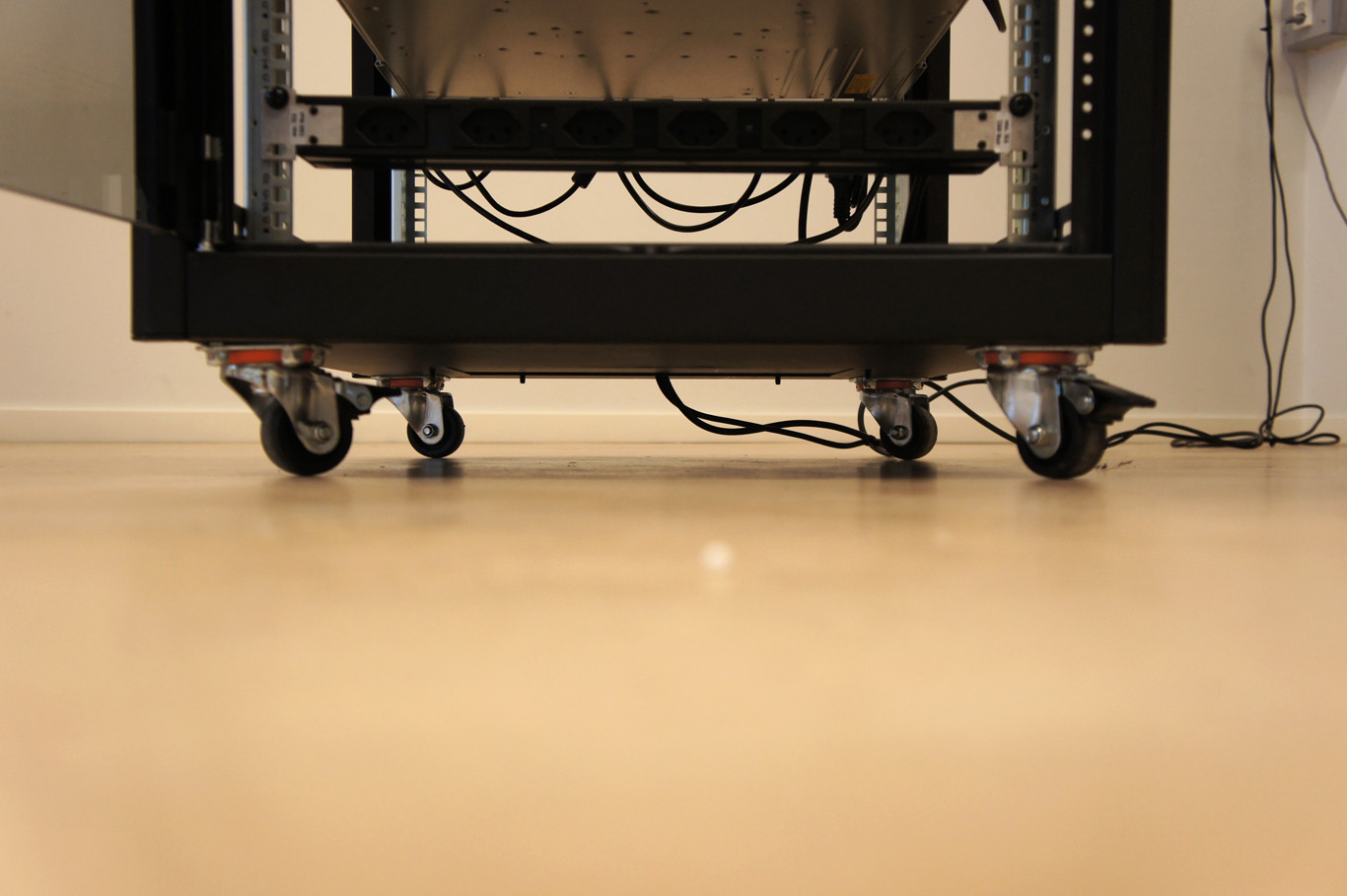

Mobile

Generally heavy (made out of steel… for no precise reason), the cabinet is nonetheless usually mobile. It has now become a common product that enters the global chains of goods, so that before you can eventually open it like we just did, it has already traveled through container ships, trains, trucks, forklifts, pallets, hands, etc. But not only that… once in place, it will still need to be moved, opened, refurbished, displaced, closed, replaced, etc. If you’ve tried to move it by hand once, you won’t want to do it a second time because of its weight… Once placed in a secured data center (in fact its bigger casing), the metal sides and doors might be removed, since the building can serve as its new and stronger “sides” (and protect the hardware from heat, dust, electrostatics, physical depredation, etc.).

-

“False”

The computer cabinet (or the data center) usually needs some sort of “false floor” or a “trap” that can be opened and closed regularly for the many cables that need to enter the cabinet. It needs a “false ceiling” too, to handle warmed and dried air –as well as additional cabling and pipes– before ejecting it outside. “False floor” and “false ceiling” are usually also used for air flow and cooling needs.

-

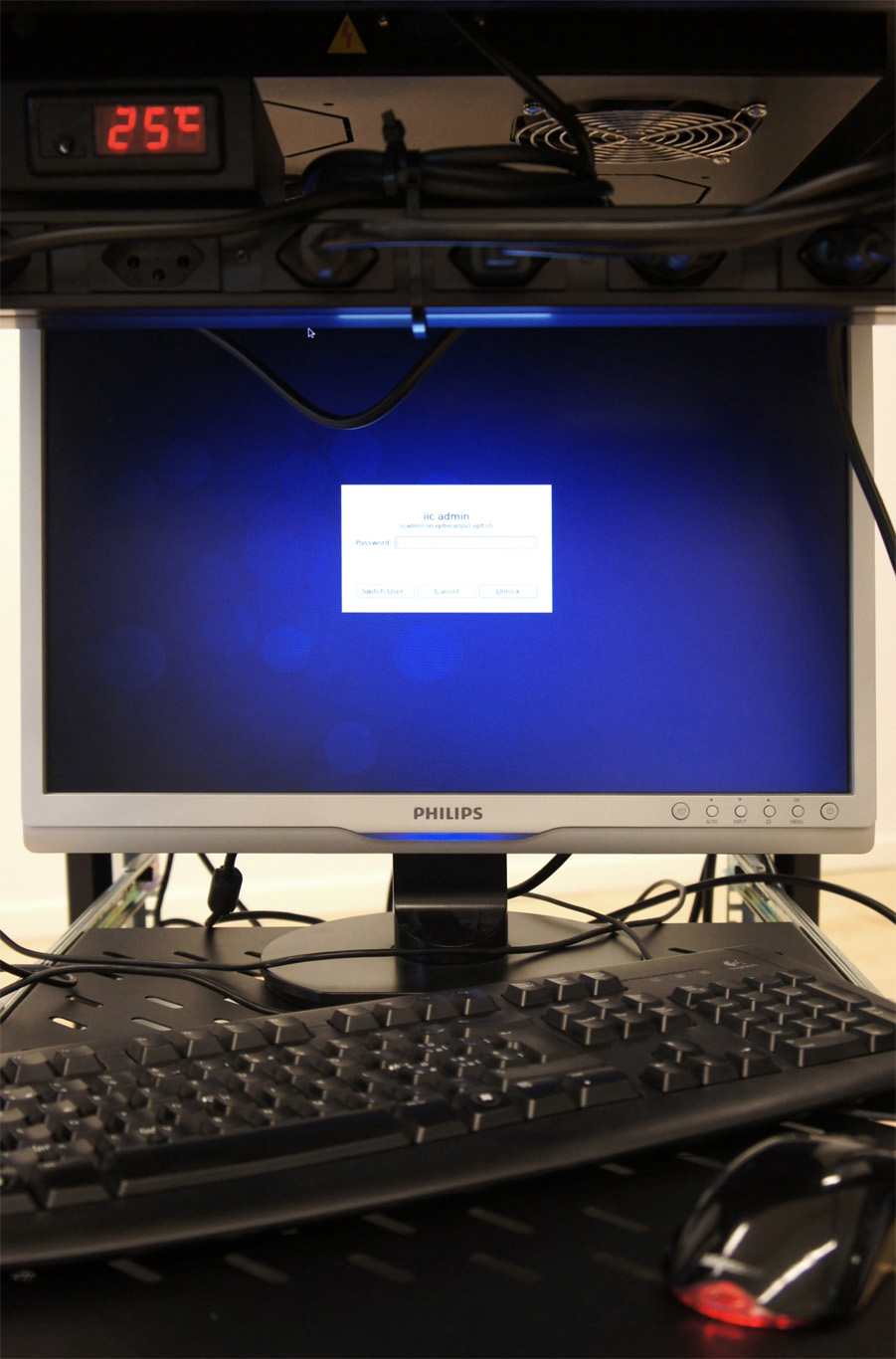

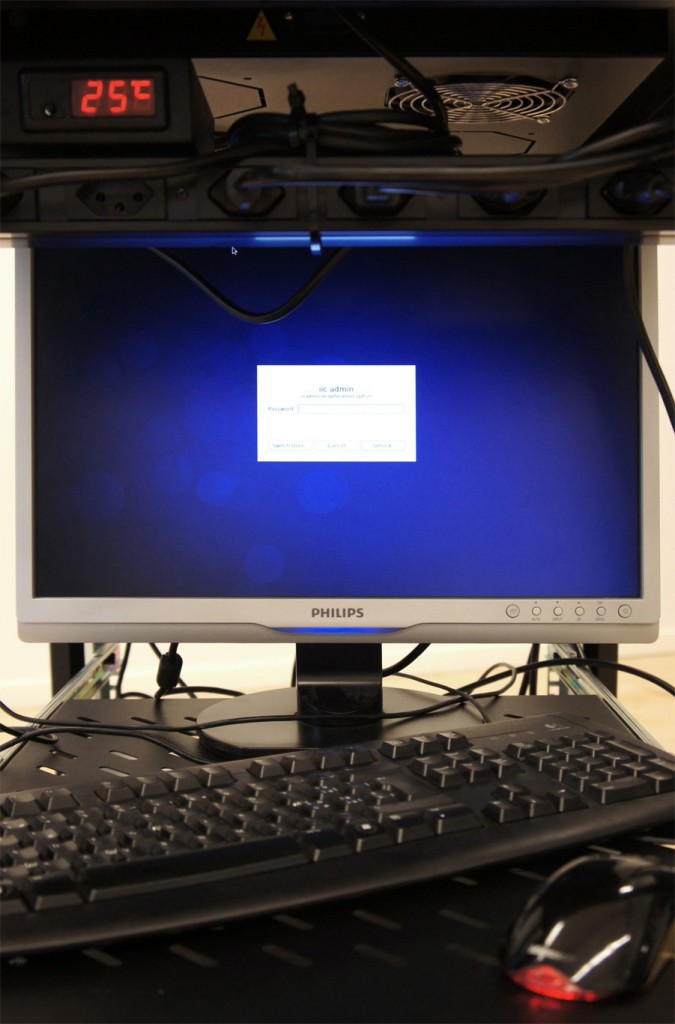

“Porous” and climatically monitored, for facilitated air flow (or any other cooling technology, water through pipes would be better)

Temperature and atmosphere monitoring, air flow and the avoidance of electrostatics are of high importance. Hardware doesn’t withstand high temperatures and either fails or wears out more quickly. Air flow must be controlled and facilitated: the air gets hot and dry while cooling the machines, and it might become charged with positive ions, which in turn will attract dust and increase electrostatics. The progressive heating of air triggers its vertical movement: “fresh” and cool(ed) air enters from the bottom (“false floor”), is directed towards the computer units to cool them down (if the incoming air is not fresh enough, it will need artificial cooling: 27°C seems to be the very upper limit before cooling, but most operators use a 24-25°C limit or less) and then gets heated by the process of cooling the servers. Being lighter for the same volume, the heated air moves upward and needs to be extracted, usually by mechanical means (fans, in the “false ceiling”). In bigger data centers, cool and hot “corridors” might be used instead of floors and ceilings, which in turn define whole hot and cold areas of floor space.

To let air flow through more easily, cabling, furniture and architecture must facilitate its movement and never become obstacles.
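The temperature thresholds mentioned above can be turned into a very simple monitoring rule. The sketch below is only an illustration: the function name and the three status labels are our own assumptions, and the two limits are the approximate figures quoted in the text, not values taken from a standard.

```python
OPERATOR_LIMIT_C = 25.0  # "most operators use a 24-25°C limit or less"
UPPER_LIMIT_C = 27.0     # "the very upper limit before cooling"


def intake_status(temp_c):
    """Classify the temperature of the air entering the cabinet.

    The labels are hypothetical; a real monitoring tool would define its own.
    """
    if temp_c <= OPERATOR_LIMIT_C:
        return "ok"
    if temp_c <= UPPER_LIMIT_C:
        return "warm"
    return "cooling required"


if __name__ == "__main__":
    # Hypothetical readings from a sensor placed at the bottom air intake.
    for reading in (21.5, 26.0, 29.0):
        print(f"{reading:.1f} C: {intake_status(reading)}")
```

In practice such a rule would be fed by a sensor at the bottom of the cabinet (where the “fresh” air enters) and would trigger the fans or an alert.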

-

Wired (redundant)

Following the need for “redundancy” (which should prevent downtime as much as possible), the hardware parts need two different energy sources (plugs in the image), as well as two different network accesses (RJ45 plugs). In the case of big data centers with Tier certification, these two different energy sources (literally two different providers) need to be backed up by two additional autonomous (oil-fueled) engines.

-

Wired (handling)

The more hardware there is, the greater the need to manage the cables. 19″ cabinets usually have enough free space on their sides and back, between the computing units and the metallic sides of the cabinet, as well as enough cable-handling parts to allow for massive cabling.

-

Redundant (RAID hardware)

One of the key concepts of any data center / cloud computing architecture is an almost paranoid concern about redundancy (to at least double any piece of hardware, software system and stored data) so as to avoid any loss or downtime of the service. One question that could be asked here: do we really need to keep all these (useless) data? This concern about redundancy is especially expressed in hardware, in the contemporary form of a RAID architecture that, in its mirrored configuration, ensures the copy of any content on two hard disks working in parallel. If one fails, the service is maintained and still accessible thanks to the second one.

In the case of the I&IC cloud server, we have 1 x 2 TB disk for the system only (Linux and ownCloud) –which isn’t mounted in a RAID architecture, but should be to guarantee maximum “uptime”–, 2 x 4 TB RAID disks for data, and 1 x 4 TB disk for local backup –which should be duplicated in a distant second location for security reasons–. Note that our server has a size of 1U.
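The disk layout described above can be summarized in a few lines of Python. This is a minimal sketch under one explicit assumption: the two data disks are treated as a mirrored pair (RAID 1), which matches the “copy of any content on two hard disks” described earlier, although the exact RAID level of our server is not stated here. The class and field names are purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    disks: int       # number of physical disks
    disk_tb: int     # capacity of each disk, in TB
    mirrored: bool   # True if the disks mirror each other (assumed RAID 1)

    @property
    def raw_tb(self):
        """Total capacity of the physical disks."""
        return self.disks * self.disk_tb

    @property
    def usable_tb(self):
        """Capacity actually available: a mirror stores every byte twice."""
        return self.disk_tb if self.mirrored else self.raw_tb

    @property
    def survives_one_disk_failure(self):
        return self.mirrored and self.disks >= 2


# Layout of the I&IC server as described in the text.
LAYOUT = [
    Volume("system", disks=1, disk_tb=2, mirrored=False),
    Volume("data", disks=2, disk_tb=4, mirrored=True),
    Volume("backup", disks=1, disk_tb=4, mirrored=False),
]

if __name__ == "__main__":
    for v in LAYOUT:
        print(v.name, v.raw_tb, v.usable_tb, v.survives_one_disk_failure)
```

Under this assumption, the 8 TB of raw data disks yield 4 TB of usable space, and only the data volume survives the failure of a single disk. The system disk remains a single point of failure, which is why it should be mirrored too.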

Note that the more computing units are running, the hotter the hardware gets and therefore the greater the need for cooling.

Therefore…

Redundant bis (“2″ is a magic number)

All parts and elements generally come in pairs (redundancy): two different electricity providers, two electric plugs, two Internet providers, two RJ45 plugs, two backup oil motors, RAID (parallel) hard drives, two data backups, etc. Even two parallel data centers?
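Why is “2” such a magic number? A rough way to see it is to compute the chance that at least one of two components is working. The sketch below assumes that failures are independent (a strong simplification: two plugs on the same electrical circuit are not independent) and uses a purely illustrative availability of 99% for a single component. Neither the figure nor the function name comes from our setup.

```python
def combined_availability(single, copies=2):
    """Probability that at least one of `copies` independent components is up.

    `single` is the availability of one component, between 0 and 1.
    """
    return 1.0 - (1.0 - single) ** copies


if __name__ == "__main__":
    single = 0.99  # illustrative value only, not a measurement
    for copies in (1, 2, 3):
        print(copies, f"{combined_availability(single, copies):.6f}")
```

With these assumptions, doubling a 99% component brings the pair to 99.99%: the expected downtime is divided by a hundred, while a third copy adds comparatively little for its cost.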

-

Virtualized (data) architecture

Servers don’t need to be physical anymore: they can be virtualized and managed as digital machines, many of which can be installed on physical ones.

-

Interfaced

Any data center needs to be interfaced in order to manage its operation (both from an “end user” perspective, which will remain as “user friendly” as possible, and from its “control room”). Our system remains basic and just needs a login/password, a screen, a keyboard and a mouse to operate, but it can become quite complicated… (googled here).

We’ve almost finished our tour and can now close the box again…

Yet let’s consider a few additional elements…

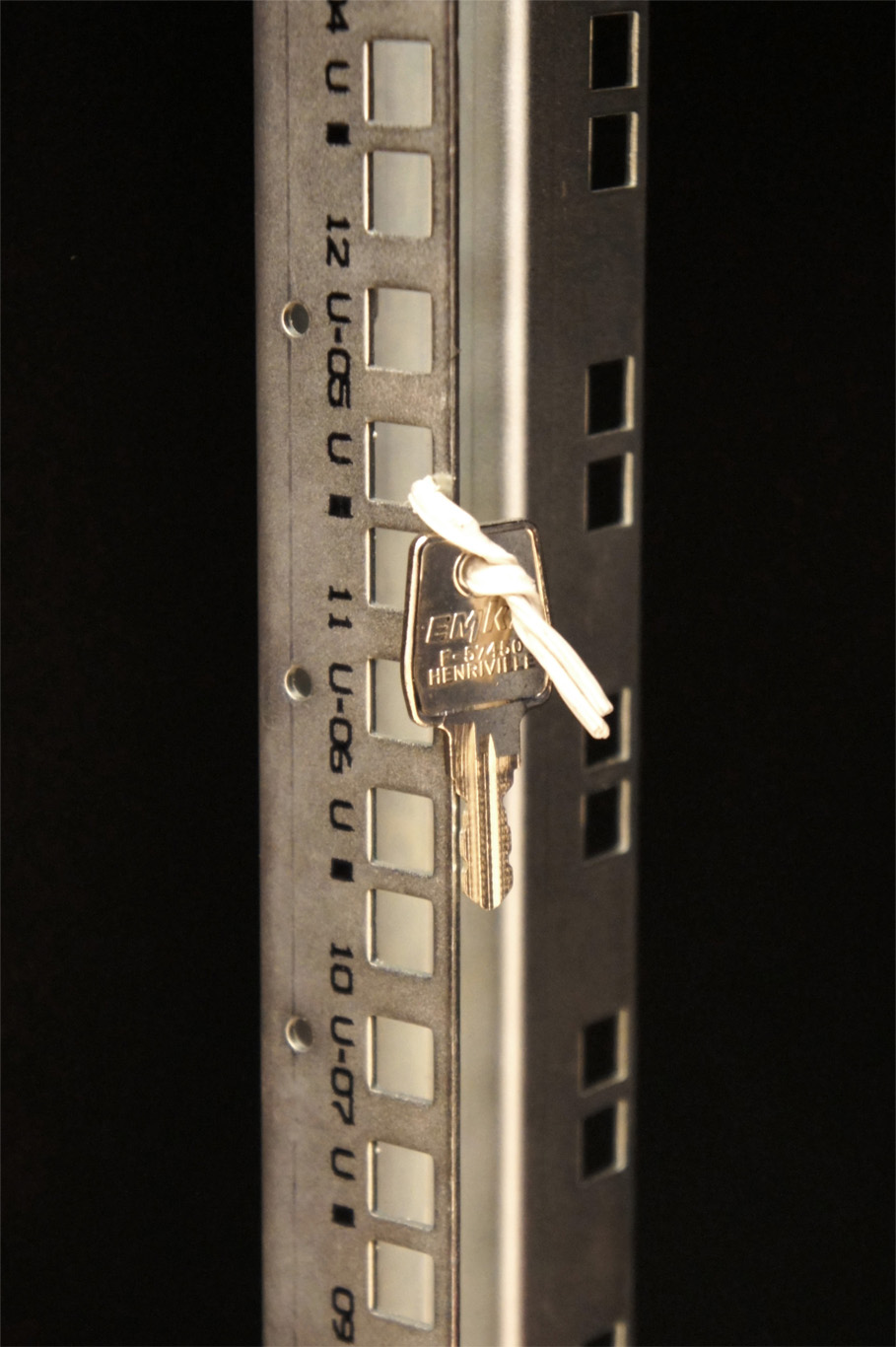

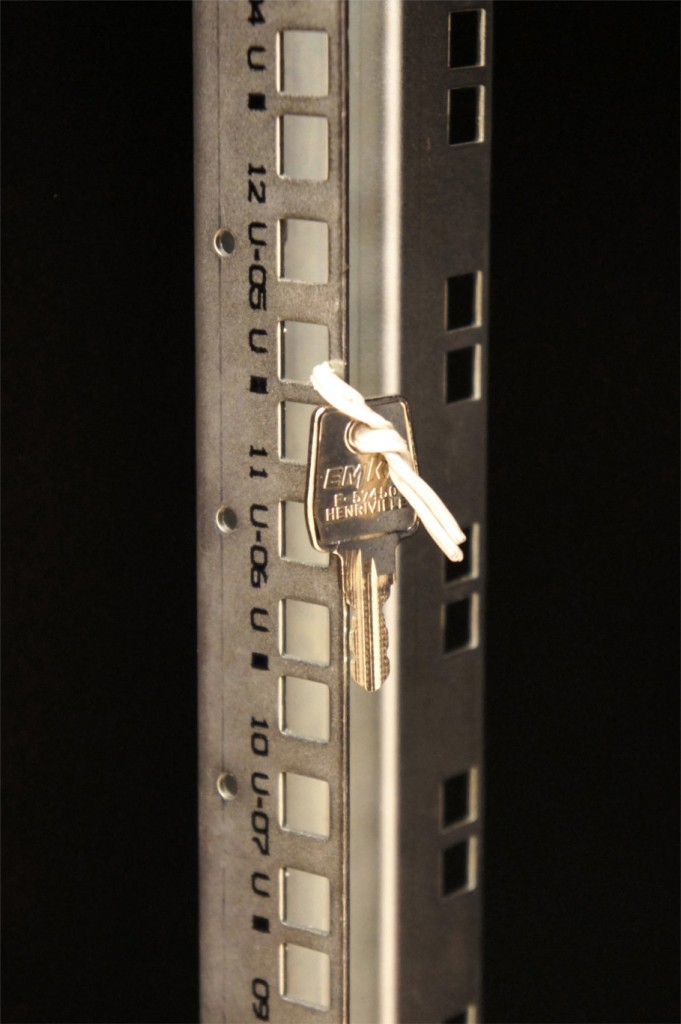

Controlled physical access

Physical access to the hardware, software, inner space and “control room” (interface) of the “data center” is not public and is therefore restricted. For this function too, a backup solution (a basic key in our case) must be managed.

Note that while we’re closing the cabinet, we can underline one last time what seems obvious: the architecture or the casing is indeed used to secure the physical parts of the infrastructure, but its purpose is also to help filter the people who can access it. The “casing” is, so to speak, both a physical protection and a filter.

-

Hidden or “Furtive”?

The technological arrangement undoubtedly seeks to remain furtive, or at least to stay unnoticed, especially when it comes to security issues.

-

Unexpressive, minimal

The “inexpressiveness” of the casing seems to serve two purposes.

The first one is to remain discreet, functional, energy efficient, inexpensive, standardized and mainly unnoticed. This is especially true for the architecture of large data centers, which could almost be considered a direct scaling up of the “black box” (or the shoe box) exemplified in this post. It could explain why their architecture is usually so poor.

The second one is a bit more speculative at this stage, but the “inexpressiveness” of the “black box” and the mystery that surrounds it remain the perfect support for technological phantasms and projections.
